Singing your songs with singing speech synthesis – a new experience to be invented.

In early 2014, experts from Acapela Group, an innovator in speech solutions, started work on a three-year program on French singing text-to-speech. This ambitious project is funded by the ANR (the French National Research Agency) and involves high-profile partners: LIMSI (coordinator), IRCAM and DUALO.

‘We aim to innovate and push the boundaries of speech technologies to offer voices that sound authentic and bring meaning and intent, as well as to investigate new territories of application. Inventing a system that allows our voices to sing is a very interesting and exciting challenge for us, and we look forward to the new uses that will come from this project and the new artistic means that it will bring to musicians of all profiles and styles, amateurs and professionals,’ says Lars-Erik Larsson, CEO of Acapela Group.

The goal of the ChaNTeR project (Chant Numérique Temps-Réel, French for ‘real-time digital singing’) is to create a high-quality system for synthesizing songs that can be used by the general public. The system will sing the words of a song, and the envisioned synthesizer will work in two modes: ‘song from text’ or ‘virtual singer’. In the first mode, the user enters a text to be sung along with a score (times and pitches), and the machine transforms it into sound. In the second, the ‘virtual singer’ mode, the user controls the song synthesizer in real time via specific interfaces, just like playing an instrument.
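As an illustration only, the input to the ‘song from text’ mode can be pictured as a list of syllables paired with score entries (times and pitches). The sketch below is not the ChaNTeR input format: the `Note` class, the `align` and `midi_to_hz` helpers, the use of MIDI note numbers and the one-note-per-syllable rule are all assumptions made for this example. The only fixed fact it relies on is the standard equal-temperament conversion from MIDI note number to frequency (A4 = MIDI 69 = 440 Hz).

```python
from dataclasses import dataclass


@dataclass
class Note:
    """One sung syllable with its score information (hypothetical structure)."""
    syllable: str      # text fragment sung on this note
    onset_s: float     # start time in seconds
    duration_s: float  # note length in seconds
    midi_pitch: int    # pitch as a MIDI note number (69 = A4)


def midi_to_hz(midi_pitch: int) -> float:
    """Equal-tempered frequency, with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2.0 ** ((midi_pitch - 69) / 12.0)


def align(syllables: list[str], score: list[tuple[float, float, int]]) -> list[Note]:
    """Pair each syllable with one (onset, duration, pitch) score entry.

    Assumes exactly one note per syllable, which real songs often violate.
    """
    if len(syllables) != len(score):
        raise ValueError("expected exactly one score entry per syllable")
    return [Note(s, on, dur, p) for s, (on, dur, p) in zip(syllables, score)]


# Example: the first four notes of "Frère Jacques" (C4, D4, E4, C4).
notes = align(
    ["Frè", "re", "Jac", "ques"],
    [(0.0, 0.5, 60), (0.5, 0.5, 62), (1.0, 0.5, 64), (1.5, 0.5, 60)],
)
for n in notes:
    print(f"{n.syllable:5s} {n.onset_s:4.1f}s  {midi_to_hz(n.midi_pitch):7.2f} Hz")
```

A synthesizer would take target pitches and timings of this kind and render the matching sung audio. The ‘virtual singer’ mode would instead receive such values continuously from a control interface.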

To build this synthesizer, the project will combine advanced voice transformation techniques, including analysis and processing of the parameters of the vocal tract and the glottal source, with state-of-the-art know-how in unit selection for speech synthesis, rule-based singing synthesis systems, and innovative gesture control interfaces. The project focuses on capturing and reproducing a variety of vocal styles (e.g. lyrical/classical, popular/song).

A prototype singing synthesis system will be developed for use by the project partners, either to offer synthesized singing voice and singing instrument products that are currently lacking or to improve the functions of existing products. The project will offer musicians and performers a new artistic approach to synthesized song, with new means of creation that make interactive experiences with a sung voice possible.

The first recordings were made in March with artists Marlene Schaft and Raphaël Treiner, who performed songs from different repertoires.

These recordings will be used as the basis for building the TTS system, in coordination with the IRCAM and LIMSI labs.

The TTS synthesizer will be implemented in the Dualo system, whose purpose is to revolutionize musical practice with a new generation of musical instruments based on an original, patented arrangement of notes: the dualo principle.

IRCAM will contribute research and development in singing voice transformation and concatenative synthesis.

LIMSI will contribute research and development in gesture-controlled singing instruments, based on the Chorus Digitalis project.

More information about the project’s players

Dualo system

Latest news

Say Hello to 8 New AI Child Voices! Expanding Choice and Diversity

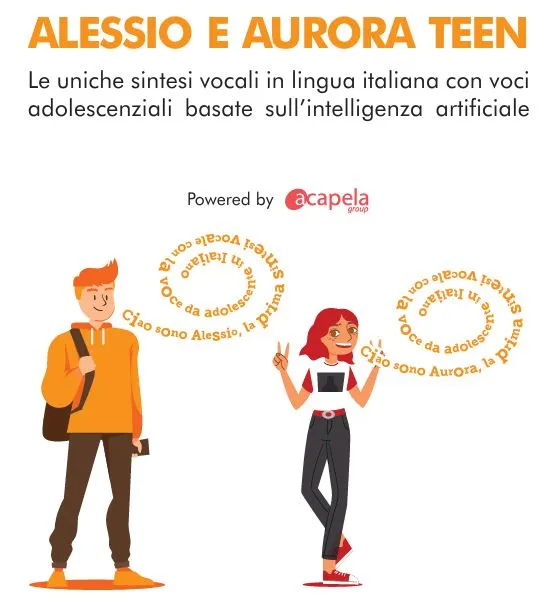

AI Digital Voices for Italian Adolescents

Acapela Group expands inclusive new German digital child voice options